Initial engagements with AI technologies, often characterized as “naive AI,” risk creating brittle, unmanageable, and ultimately disappointing solutions. The key to success in AI lies in applying a deliberate and strategic “well-architected” approach that leverages the already diverse solution stack in this rapidly evolving new technical domain.

This article is a guideline for architects. It aims to dissect the difference between short-sighted AI implementation (“let’s just use RAG”) and thoughtfully designed AI systems. More importantly, it introduces a set of pivotal technologies and approaches that are forming the AI solution landscape, offering architects a significantly enlarged toolbox to build robust, scalable, and impactful AI-powered systems.

‘Naive’ AI: Typical Stumbling Blocks

In the initial phase of AI integration, many organizations fall short with quick-win solutions and superficial integrations. This often results in what we classify as “naive AI.” Characteristics of this approach include:

- Treating Models as Black Boxes: Deploying pre-trained models without understanding their limitations, biases, or internal workings. This leads to unpredictable behavior and makes debugging and fine-tuning nearly impossible.

- Ignoring Data Strategy: Focusing solely on model training without a comprehensive plan for data collection, storage, quality, governance, and lineage. AI models are only as good as the data they are trained on, and poor data hygiene cripples even the most advanced algorithms.

- Lack of Lifecycle Management: Deploying models as static entities without planning for continuous monitoring, retraining, versioning, or retirement. AI models degrade over time as data distributions shift (model drift), rendering them ineffective.

- Poor Integration: Bolting AI components onto existing systems without considering seamless data flow, API design, error handling, and overall system resilience. This creates silos and operational headaches.

- Neglecting Non-Functional Requirements: Overlooking crucial aspects like security, privacy (especially with sensitive data), compliance, scalability, and cost management in the haste to deploy AI.

- Absence of Human Oversight and Explainability: Deploying AI in critical processes without mechanisms for human intervention or understanding why a model made a particular decision. This erodes trust and limits adoption in regulated industries.

- Isolated Optimizations: Focusing solely on improving a model’s accuracy score on a benchmark dataset, rather than evaluating its performance and impact within the broader business process and system context.

- Artificially Limited Solution Space: Without comprehensive knowledge over a reasonable set available AI technologies and their pros/cons, implementors choose headline technologies over doing deeper research. The first visible technology is applied without prior due dilligence.

Naive AI implementations are prone to failure, difficult to maintain, expensive in the long run, and can even introduce new risks. They often deliver limited business value, leaving stakeholders disillusioned with AI’s potential.

The Principles of Well-Architected AI

In contrast, a well-architected AI system is built upon solid engineering principles, strategic foresight, and a deep understanding of the specialties that come with building on AI. Architects should approach AI by adhering to:

- Data-Centricity: Recognizing data as a first-class citizen. This involves establishing robust data pipelines, implementing strong data governance, ensuring data quality, and thinking strategically about data as a product.

- Adaptability and Continuous Improvement: Building systems that can evolve. This includes strategies for monitoring model performance, efficiently updating or specializing models, and incorporating feedback loops.

- Reliability, Resilience, and Observability: Implementing robust error handling, monitoring, logging, and alerting mechanisms to ensure the AI system is dependable and its behavior can be understood.

- Security and Governance by Design: Integrating security controls, privacy measures, and compliance requirements from the outset, rather than as an afterthought.

- Human-AI Collaboration: Designing interfaces and workflows that facilitate effective collaboration between human users and AI components, including mechanisms for oversight and correction.

- Holistic System Optimization: Evaluating and optimizing the end-to-end system performance, considering factors like latency, throughput, cost, and business impact, not just isolated model metrics.

- Ethical AI and Responsible Deployment: Actively addressing potential biases, ensuring fairness, and establishing guardrails to prevent misuse or harmful behavior.

- Modularity and Composability: Designing AI systems as a collection of interoperable components (models, data services, agents, tools) that can be independently developed, deployed, and scaled.

Well-architected AI systems are a much better fit for the job, last longer and yield better results. They deliver tangible business value and can adapt to changing requirements and data landscapes. Achieving this requires architects to look beyond simplistic machine learning concepts and incorporate new paradigms and technologies into their toolkit.

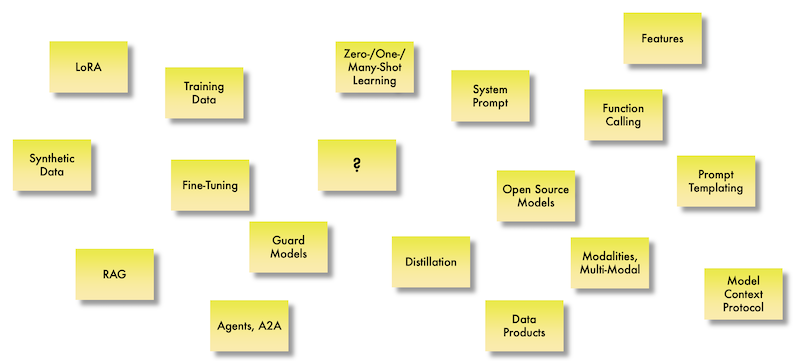

Navigating the Modern AI Solution Space: Key Technologies

We’ll continue with a crucial set of technologies and approaches that form the bedrock of the well-architected AI tech stack. Understanding and strategically employing these elements significantly enlarges the solution space for building sophisticated AI applications. Let’s explore them:

Data Foundation: The Bedrock of AI

- Training Data: At its core, AI relies on data for learning. Architects must consider the entire pipeline for acquiring, cleaning, annotating, and storing training data. The volume, variety, and velocity of data directly impact model performance. A well-architected approach involves versioning of data, features, models and model evaluations.

- Synthetic Data: Generating artificial data that mimics the statistical properties of real-world data. This is invaluable when real data is scarce, expensive to collect, sensitive (privacy concerns), or biased. Architects can leverage synthetic data to augment training sets, improve model robustness to edge cases, and test system behavior under various scenarios without using sensitive production data.

- Features: These are the input variables that a model uses to make predictions or decisions. Feature engineering – the process of transforming raw data into features that better represent the underlying problem to the model – remains a critical skill. Architects need to consider platforms and pipelines for automated feature engineering and managing feature stores to ensure consistency and reusability across different models and applications.

- Data Products: Shifting the perspective from data as merely raw material to data as a shareable, valuable product with defined ownership, quality standards, and discoverability. This aligns with Data Mesh principles and is essential for providing reliable, curated datasets for AI development and deployment across the enterprise. Architects can design data product pipelines that serve the specific needs of various AI initiatives, ensuring data is trustworthy and easily accessible.

Model Adaptation and Efficiency: Tailoring Intelligence

- Fine-Tuning: The process of taking a pre-trained model (often a large foundation model) and further training it on a smaller, task-specific dataset. This adapts the model’s capabilities to a particular domain or task without training a model from scratch. Architects can use fine-tuning to leverage the power of large models while specializing them for enterprise use cases, improving accuracy and relevance.

- LoRA (Low-Rank Adaptation): A lightweight fine-tuning technique. Instead of updating all the weights of a large pre-trained model, LoRA injects small, trainable matrices into specific layers. This drastically reduces the number of parameters that need to be trained, making fine-tuning faster, less computationally intensive, and requiring significantly less storage for adapted models. For architects, LoRA offers a practical way to customize large models for numerous downstream tasks without the overhead of full fine-tuning, enabling broader adoption and experimentation.

- Distillation: Training a smaller, “student” model to mimic the behavior of a larger, more complex “teacher” model. The student model learns to reproduce the teacher’s outputs, often achieving comparable performance but with significantly fewer parameters and computational requirements. Architects can use distillation to create smaller, faster, and cheaper models for deployment in resource-constrained environments (like edge devices) or for applications requiring low latency.

Interaction and Control: Guiding AI Behavior

- System Prompt: In the context of GenAI, this is an initial instruction or context provided to the model to define its persona, constraints, or overall goal for a conversation or task. Architects designing AI assistants or conversational interfaces can use system prompts to enforce desired behavior, ensuring the AI stays on topic, adheres to safety guidelines, or adopts a specific tone.

- BAML (Boundary ML): A domain-specific language and framework to bring rigor to prompt engineering. BAML treats prompts as type-safe functions with defined inputs and structured outputs (schemas). This allows architects and developers to define the expected structure of an AI model’s response, making it easier to parse and integrate AI outputs into downstream systems. BAML provides tooling for testing prompts, ensures reliability in extracting structured data, supports various models and programming languages, and helps manage prompts systematically at scale. For architects, BAML offers a way to build more robust, maintainable, and less brittle AI applications by adding structure and reliability to the prompt layer.

- Zero-/One-/Many-Shot Learning: Architects can leverage these techniques, often through careful prompt design, to quickly adapt general-purpose models to new tasks without the need for extensive fine-tuning, speeding up development and reducing data requirements for new applications.

- Zero-Shot Learning: The model performs a task it hasn’t seen examples for, relying solely on its broad pre-training knowledge based on the prompt instruction.

- One-Shot Learning: The model is given a single example of the task in the prompt before being asked to perform it. Often the format of the expected response is being specified in the prompt.

- Many-Shot Learning (or Few-Shot Learning): The model is given several examples of the task in the prompt.

- Function Calling: The ability of a language model to identify when a user’s request can be fulfilled by calling an external tool or API and to output a structured format (e.g., JSON) specifying the function to call and its arguments. This is a critical pattern for connecting language models to real-world systems and enabling them to take actions (e.g., booking a flight, sending an email, querying a database). Architects can design systems where language models act as intelligent orchestrators, interacting with backend services via function calls.

- Prompt Templating: Creating reusable structures or templates for prompts. This allows for dynamic insertion of user input, data, or context into a pre-defined prompt structure. Architects can use prompt templating to standardize interactions with AI models, ensure consistency, and simplify the creation of complex prompts for different use cases. This needs a deeper level of control over the AI system as with the previously mentioned methods.

- Model Context Protocol (MCP): An emerging standard to facilitate communication between AI models/agents and the tools/data sources they need to interact with. Similar to how APIs standardize communication between services, MCP aims to provide a universal way for models to understand and utilize external functions and data, enhancing their ability to operate in real-world workflows. Architects designing agentic systems or applications that require models to interact with enterprise data and tools will find MCP essential for building interoperable and scalable solutions.

System Design Patterns: Building Blocks for Intelligent Applications

- RAG (Retrieval Augmented Generation): A pattern where a language model’s ability to generate text is augmented by retrieving relevant information from an external knowledge base. When a user asks a question, the system first retrieves relevant documents or data snippets from a source (e.g., a document database, a knowledge graph) and then provides this retrieved context to the language model along with the user’s query. The model then uses this combined information to generate a grounded and more accurate response, reducing hallucinations and enabling the use of up-to-date, domain-specific information. Architects can implement RAG to build chatbots, question-answering systems, and content generation tools that leverage proprietary or constantly updated data.

- Agents, A2A (Agent-to-Agent): AI agents are autonomous or semi-autonomous programs that can perceive their environment, make decisions, and take actions to achieve goals. They often leverage language models for reasoning and planning and use tools (via Function Calling) to interact with the world. The Agent-to-Agent (A2A) protocol is an open standard enabling different AI agents, built by various teams or organizations, to discover each other’s capabilities and collaborate on complex tasks. For architects, designing with agents and A2A opens up possibilities for building sophisticated, distributed AI systems where different specialized agents work together, breaking down monolithic AI applications into more manageable and interoperable components.

- Modalities, Multi-Modal: AI models are increasingly capable of processing and generating information across different modalities, such as text, images, audio, and video. Multi-modal AI systems can understand the content of an image and generate a caption, listen to speech and transcribe it while analyzing the speaker’s emotion, or generate video from a text description. Architects designing applications that require understanding or generating rich, multi-sensory content will leverage multi-modal models and consider the infrastructure needed to handle diverse data types.

Governance and Safety: Ensuring Responsible AI

- Guard Models: These are models or mechanisms specifically designed to monitor the inputs and outputs of other AI models (especially generative ones) to ensure they adhere to safety guidelines, ethical principles, and desired behavior. Guard models can filter out harmful content, detect bias, or ensure responses stay within defined boundaries. Architects must incorporate guard models and other safety layers into their AI architectures to mitigate risks associated with deploying potentially unpredictable AI systems, particularly in sensitive applications.

Model Selection Strategy: Openness and Flexibility

Open Source Models__: The increasing availability of powerful, open-source AI models offers architects significant flexibility and control. Unlike proprietary models, open-source alternatives allow for greater transparency, customization (through fine-tuning or LoRA), and deployment across various infrastructure environments. Architects can strategically choose between open-source and proprietary models based on cost, performance requirements, customization needs, and vendor lock-in considerations.

Architectural Considerations and the Path Forward

Adopting this modern AI tech stack requires architects to think beyond traditional software architecture. Key considerations include:

- Data Infrastructure: Investing in scalable and flexible data platforms that can handle diverse data types, support real-time processing, and enable efficient data governance and product creation.

- MLOps and AIOps: Establishing robust pipelines and practices for automating the deployment, monitoring, and management of AI models and agentic systems. This includes continuous integration/continuous deployment (CI/CD) for models, automated performance monitoring, and infrastructure management for AI workloads.

- Tooling and Frameworks: Selecting appropriate libraries, frameworks, and platforms that support the technologies discussed, considering factors like ease of use, community support, scalability, and integration capabilities.

- Security and Privacy: Implementing strong authentication and authorization mechanisms, data encryption, differential privacy techniques, and compliance frameworks (e.g., GDPR, HIPAA) to protect sensitive data and AI systems.

- Talent and Skills: Recognizing the need for teams with expertise in data engineering, MLOps, prompt engineering, and specialized AI techniques.

- Cost Management: Carefully evaluating the computational and storage costs associated with training, fine-tuning, deploying, and running AI models, especially large language models, and using techniques like distillation and LoRA to optimize resource usage.

Architects have the opportunity to combine all available AI elements in novel ways to create powerful and intelligent solutions that were previously impossible. For instance, combining RAG with Function Calling allows a language model to answer questions based on internal documents and then take action based on the retrieved information. Implementing agents that communicate via A2A, leveraging multi-modal capabilities and guarded by safety models, can lead to highly autonomous and reliable enterprise workflows.

Conclusion

The landscape of AI is evolving at a breathtaking pace, presenting both immense opportunities and significant challenges for enterprise and software architects. The difference between successfully leveraging AI and falling into the traps of naive implementation lies in a commitment to well-architected principles and a deep understanding of the modern AI tech stack.